Introduction

Although everyday conversation and speech understanding depend heavily on auditory perception, visual cues also play an important role, especially in challenging listening conditions. Previous studies have shown that adding visual information can improve speech perception in noise. However, relatively little is known about how adding visual information during a speech perception task affects the deployment of cognitive resources and listening effort.

This project addresses the question of how effort is differentially allocated in audiovisual and auditory-only environments. Funding bodies include the William Demant Foundation and Innovation Fund Denmark.

Aims

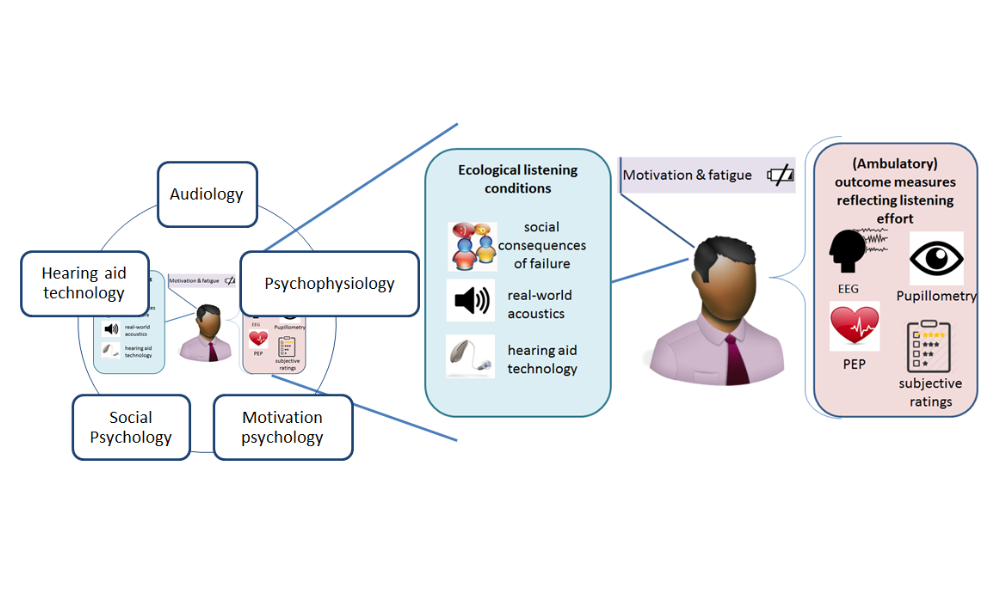

The project focuses on the study, design and validation of a new paradigm for measuring listening effort in an audiovisual environment using modern objective measures, namely electroencephalography (EEG) and pupillometry. It aims to extend existing findings on listening effort and the cognitive demands of speech into the audiovisual domain, where listeners can leverage both auditory and visual cues. By taking a further step into the study of multisensory perception, the project is expected to provide a better understanding of listeners' real-life experiences through higher ecological validity.

Methodology

This project will use a newly developed sentence corpus (Danish Sentence Test, DAST), which includes validated auditory-only and audiovisual material. Participants will listen to both auditory-only and audiovisual speech across a range of noise levels. Behavioral scores, such as the number of sentences repeated correctly, will be recorded alongside EEG and pupillometry throughout the experiment. The first part of the project will focus on young individuals with audiometrically normal hearing. Later parts will extend the work to listeners with hearing impairment and study the impact of hearing aid signal processing.