Introduction

Picture yourself at a restaurant with your friends and family, surrounded by other people enjoying themselves as well. You are chatting with your friends when your cousin joins in. The conversation moves between topics, such as food or amusing stories, and you effortlessly shift your attention from one speaker to the next to follow along. Most people enjoy this, but people with hearing problems find it challenging. They struggle to switch their attention between speakers, which makes conversations in noisy places like this difficult to follow.

Aims

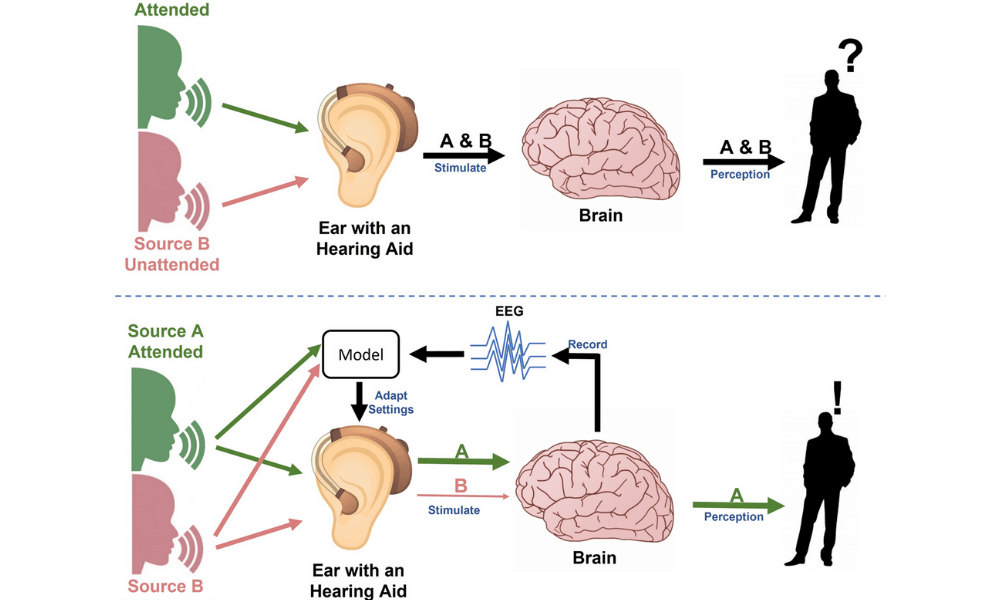

In our earlier research, we showed that hearing aids (HAs) can help the brain by making relevant sounds louder and reducing background noise. Now we want to understand whether the brain not only registers the relevant sounds but also comprehends them. We’re studying how the brain processes language at increasing levels of abstraction: from individual speech sounds, through words and sentences, to meaning. By understanding this comprehension process, we hope to help people with hearing problems understand speech better, especially in noisy places. Our goal is to improve interventions such as hearing aids so that users can grasp language more easily amidst noise, which would greatly improve their social interactions.

Methodology

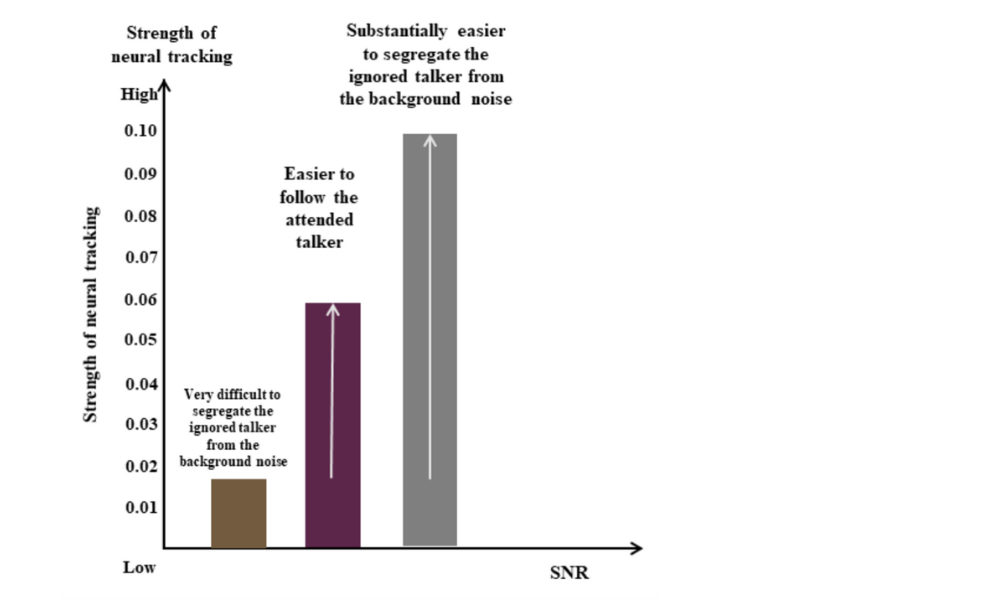

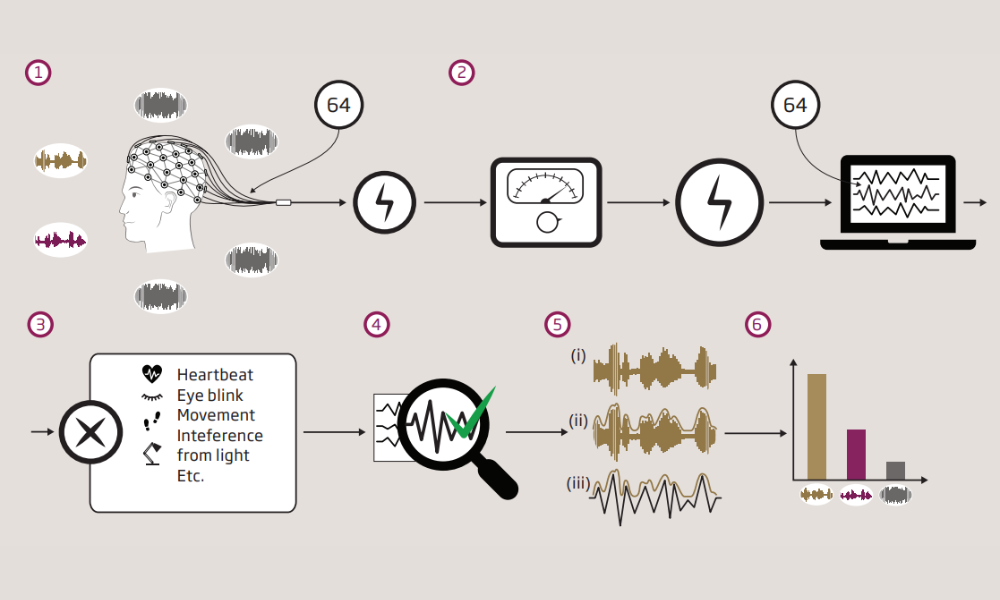

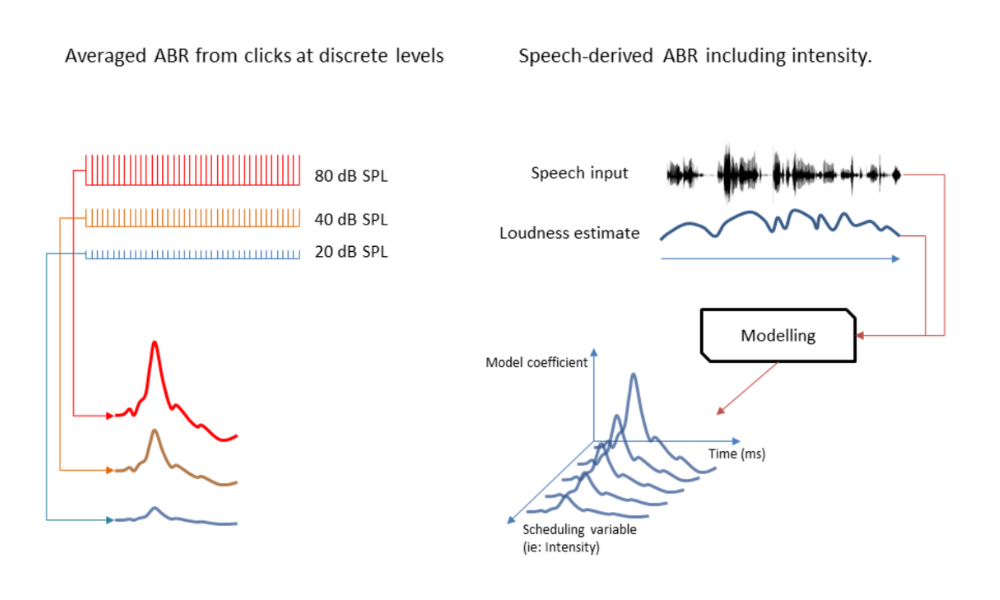

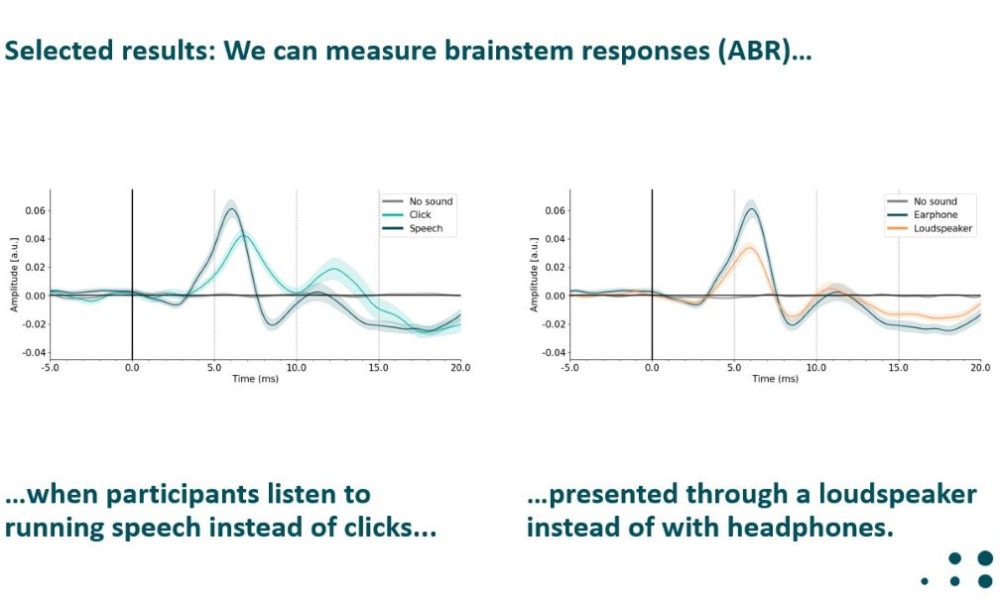

We are developing advanced computational methods for neural speech tracking. These methods use electroencephalography (EEG), a technique that records the brain’s electrical activity, to observe how the brain tracks and understands different speakers. They can explain how different language elements are processed in the brain, and they allow us to take a closer, more detailed look at the neural representation of speech. This detailed view offers a better understanding of language processing, which we hope will ultimately make social interactions more pleasant for everyone, including those with hearing challenges.