Introduction

Our brain constantly scans the environment for potentially relevant information outside our current focus of attention. When a sound suddenly appears, it may capture our attention automatically. The sound may be relevant, such as a car approaching while we cross a street, or irrelevant, such as a refrigerator switching on.

Ideally, hearing devices should let relevant sounds capture attention while attenuating irrelevant sounds to avoid distraction. But how can we tell which sounds are relevant and which are not? And can we tell how much support hearing aid users need in this regard?

Aims

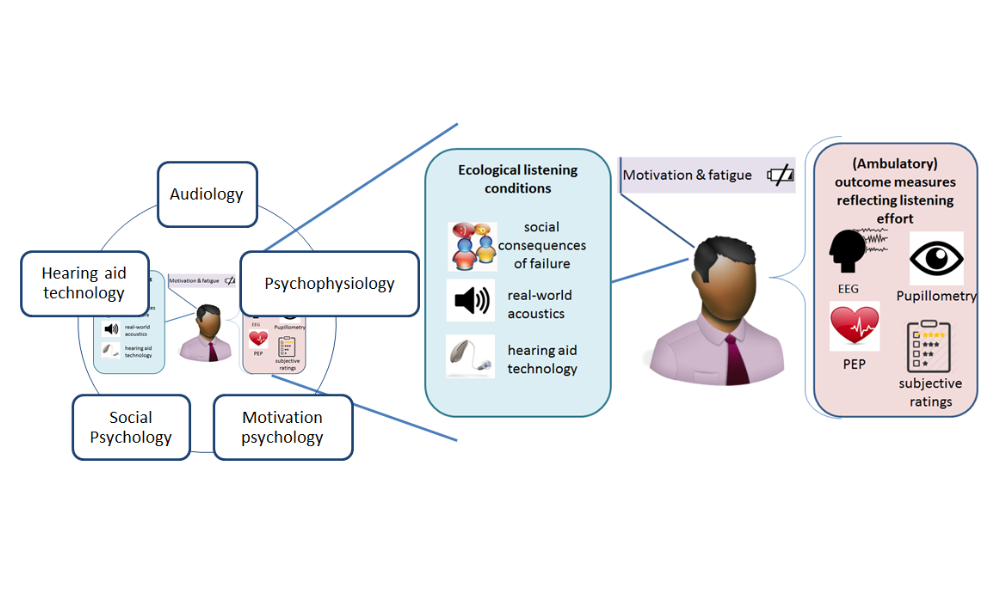

This project aims to identify neurophysiological and behavioral responses to both relevant and irrelevant sounds. These responses should indicate how strongly attention is captured by these sounds and how easily a listener can distinguish relevant from irrelevant sounds. Ultimately, the difference between the responses to relevant and irrelevant sounds should indicate how well hearing devices support the brain: ideally, the response to relevant sounds should be relatively strong and the response to irrelevant sounds relatively weak. In other words, an ideal hearing aid should maximize the difference between the responses to relevant and irrelevant attention-capturing sounds.

In the experiments, participants listen to an audiobook (target speech) while additional sounds (distractors) are played at unpredictable points in time. Neurophysiological responses are recorded with an eye tracker (pupil size) and electroencephalography (EEG).
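As a rough illustration of how the difference between responses to relevant and irrelevant sounds could be quantified, the sketch below averages a continuous recording (for example, pupil size) in a window after each distractor onset, subtracts the mean of a short pre-onset baseline, and contrasts relevant with irrelevant distractors. The function names `epoch_response` and `relevance_contrast`, the 0.5 s baseline, and the 2 s response window are hypothetical choices made for illustration; this is not the project's actual analysis pipeline.

```python
from statistics import mean


def epoch_response(signal, onset, fs, baseline_s=0.5, window_s=2.0):
    """Baseline-corrected mean response after one distractor onset.

    Hypothetical helper: `signal` is a list of samples (e.g. pupil size)
    recorded at `fs` Hz, and `onset` is the distractor onset as a sample
    index. The baseline and window lengths are illustrative assumptions.
    """
    n_base = int(baseline_s * fs)  # samples in the pre-onset baseline
    n_win = int(window_s * fs)     # samples in the post-onset window
    if onset - n_base < 0 or onset + n_win > len(signal):
        raise ValueError("epoch extends beyond the recording")
    baseline = mean(signal[onset - n_base:onset])
    return mean(signal[onset:onset + n_win]) - baseline


def relevance_contrast(signal, fs, relevant_onsets, irrelevant_onsets):
    """Mean response to relevant minus mean response to irrelevant distractors."""
    relevant = mean(epoch_response(signal, t, fs) for t in relevant_onsets)
    irrelevant = mean(epoch_response(signal, t, fs) for t in irrelevant_onsets)
    return relevant - irrelevant


if __name__ == "__main__":
    fs = 10  # synthetic recording sampled at 10 Hz
    sig = [0.0] * 100
    for i in range(20, 40):  # strong response after a relevant distractor
        sig[i] = 2.0
    for i in range(60, 80):  # weak response after an irrelevant distractor
        sig[i] = 0.5
    print(relevance_contrast(sig, fs, [20], [60]))  # 1.5
```

Under these assumptions, a positive contrast means that relevant distractors evoked the larger response on average, so a hearing device that increases this contrast would, in the sense of the aims above, be supporting the listener better.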

Methodology

We use established neurophysiological measures of auditory attention and effort: electroencephalography and pupillometry. EEG studies have shown that response strength depends strongly on attention: attended sounds evoke stronger responses than ignored sounds. Pupillometry studies have shown that the pupil response reflects how much cognitive effort is spent on a task.

In the experiments, participants try to focus on a particular voice while different sounds occur in the background. The neurophysiological measures may give us valuable insights into how these background sounds are processed, something that previous experimental paradigms could not measure.